University of Michigan, Barton Research Group.

Last updated: Feb. 27, 2023

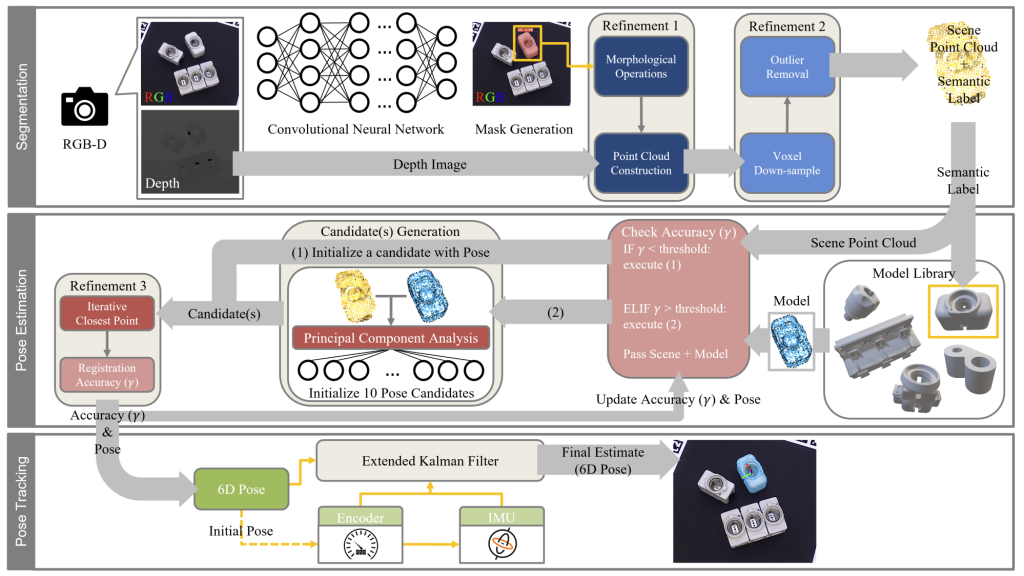

Multi-sensor aided deep pose tracking

Members

- Hojun Lee, Researcher, University of Michigan

- Tyler Toner, PhD student, University of Michigan

- Dawn Tilbury, Professor, University of Michigan

- Kira Barton, Associate Professor, University of Michigan

Link

Abstract

Deep learning has been widely explored to resolve challenges in pose estimation of generic objects. For robots to dynamically interact with their surroundings, accurate pose estimation of nearby objects is crucial. Many recent works have focused on developing neural networks to estimate pose from monocular camera input. However, most of these methods struggle to cope with occlusion and truncation, which are commonly encountered in mobile robotics applications. Occlusion- and truncation-robust pose estimation is extremely important in such cases, as the mobility of a robot introduces major uncertainty that complicates manipulation. Hence, we present Multi-Sensor aided Deep Pose Tracking (MS-DPT), which uses a Convolutional Neural Network (CNN) to generate pose estimates from RGB-D camera input and fuses them with inertial sensor measurements. Our work focuses on improving pose tracking performance in cases where the target object becomes occluded or truncated as a mobile robot approaches it. The proposed approach accurately tracks textureless objects with high symmetries while operating at 10 FPS during experiments.
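
The abstract does not say which fusion algorithm MS-DPT uses, so the following is only an illustrative sketch of the general idea: inertial readings keep a state estimate moving forward while camera-based estimates are unavailable (for example, during occlusion), and camera-based estimates correct it when they return. It assumes a simple 1-D constant-acceleration Kalman filter. The class `Tracker1D`, the function `run_demo`, and all noise values are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class Tracker1D:
    """Hypothetical 1-D position/velocity Kalman filter (illustration only)."""
    pos: float = 0.0
    vel: float = 0.0
    # Entries of the symmetric 2x2 covariance [[p00, p01], [p01, p11]].
    p00: float = 1.0
    p01: float = 0.0
    p11: float = 1.0

    def predict(self, accel: float, dt: float, accel_var: float = 0.05) -> None:
        """Propagate the state using an inertial (acceleration) reading."""
        self.pos += self.vel * dt + 0.5 * accel * dt * dt
        self.vel += accel * dt
        # P <- F P F^T + G G^T * accel_var,
        # with F = [[1, dt], [0, 1]] and G = [dt^2 / 2, dt].
        p00 = self.p00 + 2.0 * dt * self.p01 + dt * dt * self.p11
        p01 = self.p01 + dt * self.p11
        p11 = self.p11
        g0, g1 = 0.5 * dt * dt, dt
        self.p00 = p00 + g0 * g0 * accel_var
        self.p01 = p01 + g0 * g1 * accel_var
        self.p11 = p11 + g1 * g1 * accel_var

    def update(self, measured_pos: float, meas_var: float = 0.01) -> None:
        """Correct the state with a position estimate (e.g. from a pose network)."""
        s = self.p00 + meas_var                # innovation variance
        k0, k1 = self.p00 / s, self.p01 / s    # Kalman gain for H = [1, 0]
        residual = measured_pos - self.pos
        self.pos += k0 * residual
        self.vel += k1 * residual
        # P <- (I - K H) P
        p00, p01, p11 = self.p00, self.p01, self.p11
        self.p00 = (1.0 - k0) * p00
        self.p01 = (1.0 - k0) * p01
        self.p11 = p11 - k1 * p01


def run_demo(steps: int = 30, dt: float = 0.1, accel: float = 0.2):
    """Track a noise-free target; steps 10..19 simulate an occluded camera."""
    tracker = Tracker1D()
    true_pos, true_vel = 0.0, 0.0
    variances = []
    for i in range(steps):
        true_pos += true_vel * dt + 0.5 * accel * dt * dt
        true_vel += accel * dt
        tracker.predict(accel, dt)         # inertial data is always available
        occluded = 10 <= i < 20            # assumed occlusion window
        if not occluded:
            tracker.update(true_pos)       # camera-based estimate, when visible
        variances.append(tracker.p00)
    return tracker, true_pos, variances


if __name__ == "__main__":
    tracker, true_pos, variances = run_demo()
    print(f"estimate={tracker.pos:.4f} truth={true_pos:.4f}")
    print(f"position variance at end of occlusion: {variances[19]:.4f}")
    print(f"position variance after re-acquisition: {variances[20]:.4f}")
```

In this toy setting the position variance grows while the camera measurement is missing and drops again once it returns, which is the qualitative behavior one would want from any occlusion-robust tracker. A full 6-DoF pose tracker would additionally need to handle orientation, sensor biases, and measurement noise, none of which are modeled here.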

Reference

- H. Lee, T. Toner, D. Tilbury, and K. Barton, “Multi-sensor aided deep pose tracking,” in Modeling, Estimation, and Control Conference (MECC), 2022.