University of Bremen, Institute for Artificial Intelligence.

Last updated: Oct. 31, 2022

Teaching robots everyday household activities

Members

- Michael Beetz, Professor, University of Bremen

- Patrick Mania, PhD student, University of Bremen

- Simon Stelter, PhD student, University of Bremen

- Vanessa Hassouna, PhD student, University of Bremen

- Sascha Jongebloed, PhD student, University of Bremen

- Alina Hawkin, PhD student, University of Bremen

Links

- IAI website: https://ai.uni-bremen.de/

- EASE: https://ease-crc.org/

- Suturo project: https://ai.uni-bremen.de/robocupsuturo22

- Github IAI: https://github.com/code-iai/

- Github Suturo: https://github.com/SUTURO

Abstract

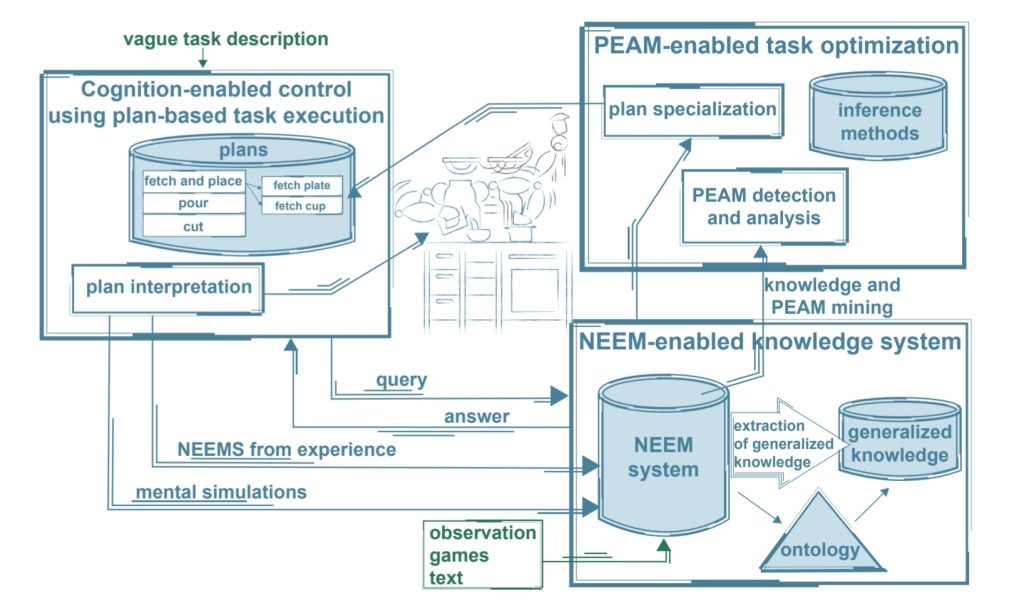

Teaching robots to perform everyday household activities has long been a goal of robotics research. It is a difficult challenge, however, because every household environment is different and every robot has different capabilities. To tackle this challenge, we propose teaching robots how to accomplish such tasks by drawing on their own experience, Virtual Reality (VR), and simulation. In VR, a human user can perform everyday activities in either a human or a robot body. The advantage of VR is that the environment can be changed much more easily and quickly than in the real world. The same VR environment can also serve as a robot simulation, which automates data generation. The performances from all of these sources can be recorded to generate NEEMs (Narrative Enabled Episodic Memories). Once the NEEMs have been gathered, various machine learning algorithms can be applied to refine and improve the robot's task performance, teaching it new skills in the process.

To familiarize more people with this system, the student project SUTURO works with these frameworks on RoboCup@Home challenges using the HSR robot. The project alternates between bachelor's and master's students and offers them a glimpse into the world of robotics.

To make our research more widely available to the community, we are working on a photorealistic simulation built with Unreal Engine, which includes the environment of our apartment laboratory and the HSR robot. We will soon release this simulation environment so that anyone interested can download and work with it. We will also provide remote access to the simulated environment, so that users need fewer local resources to work with it.

References

- Gayane Kazhoyan, Alina Hawkin, Sebastian Koralewski, Andrei Haidu, Michael Beetz, “Learning Motion Parameterizations of Mobile Pick and Place Actions from Observing Humans in Virtual Environments”, In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020.

- Simon Stelter, Georg Bartels, Michael Beetz, “An open-source motion planning framework for mobile manipulators using constraint-based task space control with linear MPC”, In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2022.

- Gayane Kazhoyan, Simon Stelter, Franklin Kenghagho Kenfack, Sebastian Koralewski, Michael Beetz, “The Robot Household Marathon Experiment”, 2020.

- Michael Beetz, Daniel Beßler, Andrei Haidu, Mihai Pomarlan, Asil Kaan Bozcuoğlu, Georg Bartels, “KnowRob 2.0: A 2nd Generation Knowledge Processing Framework for Cognition-Enabled Robotic Agents”, In IEEE International Conference on Robotics and Automation (ICRA), 2018, pp. 512-519, doi: 10.1109/ICRA.2018.8460964.

- Andrei Haidu, Michael Beetz, “Automated Models of Human Everyday Activity based on Game and Virtual Reality Technology”, In International Conference on Robotics and Automation (ICRA), 2019, pp. 2606-2612, doi: 10.1109/ICRA.2019.8793859.